Measuring power efficiency in datacenter storage is a complex endeavor. A number of factors play a role in assessing individual storage devices or system-level logical storage for power efficiency. Luckily, our SNIA experts make the measuring easier!

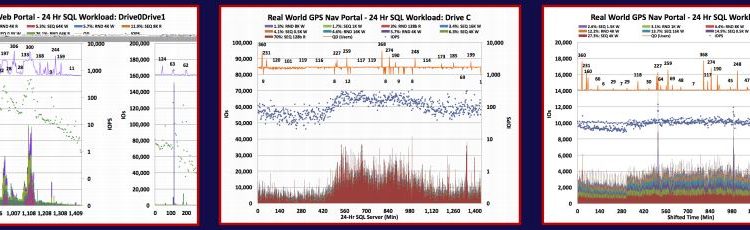

In this SNIA Experts on Data blog series, our experts in the SNIA Solid State Storage Technical Work Group and the SNIA Green Storage Initiative explore factors to consider in power efficiency measurement, including the nature of application workloads, IO streams, and access patterns; the choice of storage products (SSDs, HDDs, cloud storage, and more); the impact of hardware and software components (host bus adapters, drivers, OS layers); and access to read and write caches, CPU and GPU usage, and DRAM utilization.

Join us for the final installment of our journey to better power efficiency, Part 4: Impact of Storage Architectures on Power Efficiency Measurement.

And if you missed our earlier segments, click on the titles to read them: Part 1: Key Issues in Power Efficiency Measurement, Part 2: Impact of Workloads on Power Efficiency Measurement, and Part 3: Traditional Differences in Power Consumption: Hard Disk Drives vs Solid State Drives. Bookmark this blog series and explore the topic further in the SNIA Green Storage Knowledge Center.

Impact of Storage Architectures on Power Efficiency Measurement

Ultimately, the interplay between hardware and software storage architectures can have a substantial impact on power consumption. Optimizing these architectures based on workload characteristics and performance requirements can lead to better power efficiency and overall system performance.
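To compare architectures, power efficiency is usually expressed as work done per unit of power. Typical examples are IOPS per watt for random workloads and MiB/s per watt for sequential ones. The following is a minimal sketch of how such figures can be derived from measurement intervals. It assumes a hypothetical test harness that records, for each interval, the average power drawn by the storage under test, the IOs completed, and the bytes moved. The `Sample` type, the `efficiency` function, and the numbers in the example are illustrative only and are not taken from any SNIA specification.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One measurement interval from a hypothetical test harness."""
    seconds: float      # length of the interval
    avg_watts: float    # average power drawn by the storage under test
    io_count: int       # IOs completed during the interval
    bytes_moved: int    # bytes read plus bytes written


def efficiency(samples: list[Sample]) -> dict[str, float]:
    """Summarize a run as IOPS per watt, MiB/s per watt, and joules per IO."""
    total_s = sum(s.seconds for s in samples)
    # Energy is integrated interval by interval (E = P * t), so power that
    # varies during the run is weighted by how long it lasted.
    energy_j = sum(s.seconds * s.avg_watts for s in samples)
    total_io = sum(s.io_count for s in samples)
    total_bytes = sum(s.bytes_moved for s in samples)

    avg_watts = energy_j / total_s
    iops = total_io / total_s
    mibps = total_bytes / (1024 * 1024) / total_s
    return {
        "avg_watts": avg_watts,
        "iops_per_watt": iops / avg_watts,
        "mibps_per_watt": mibps / avg_watts,
        "joules_per_io": energy_j / total_io,
    }


# Example with made-up numbers: two 10 s intervals of 4 KiB IOs.
run = [
    Sample(seconds=10, avg_watts=8.0, io_count=200_000, bytes_moved=200_000 * 4096),
    Sample(seconds=10, avg_watts=6.0, io_count=100_000, bytes_moved=100_000 * 4096),
]
print(efficiency(run)["iops_per_watt"])  # about 2142.86
```

IOPS per watt simplifies to IOs per joule. For this reason the sketch integrates power over each interval rather than relying on a single spot reading, which would misrepresent runs whose power draw changes as the workload shifts.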

Different hardware and software storage architectures can lead to varying levels of power efficiency. Here’s how they impact power consumption.

Hardware Storage Architectures

- HDDs v SSDs:

Solid State Drives (SSDs) are generally more power-efficient than Hard Disk Drives (HDDs) because they have no moving parts and offer faster access times. SSDs typically consume less power in both idle and active states.

- NVMe® v SATA SSDs:

NVMe (Non-Volatile Memory Express) SSDs often have better power efficiency than SATA SSDs. NVMe's direct connection to the PCIe bus allows faster data transfers, which reduces the time components must stay active and draw power. NVMe SSDs also support multiple power states, which let the host trade peak performance for lower power draw.

- Tiered Storage:

Systems that combine SSDs and HDDs in tiered storage optimize power consumption by placing frequently accessed data on SSDs for quicker retrieval, which minimizes activity on power-hungry HDDs.

- RAID Configurations:

Redundant Array of Independent Disks (RAID) setups can affect power efficiency. RAID levels such as 0 (striping) and 1 (mirroring) have different power profiles because of how they distribute and duplicate data across drives. For example, RAID 1 writes every block to each drive in the mirror, which multiplies write activity, while RAID 0 spreads data across drives without redundancy.
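Several of the hardware effects above reduce to one idea: energy is power multiplied by time. A faster device finishes its work sooner and spends more of the measurement window at idle power, while redundancy such as mirroring increases the amount of physical work. The following is a rough sketch of both effects under simplifying assumptions. A drive is modeled as drawing a fixed active power while it services IO and a fixed idle power otherwise. It ignores spin-up, power-state transition latency, parity RAID levels, and queueing. `DriveProfile`, `window_energy_j`, `raid_write_ios`, and all wattage and IOPS figures are hypothetical and are not measured values for any real product.

```python
from dataclasses import dataclass


@dataclass
class DriveProfile:
    """Hypothetical drive characteristics; substitute measured values."""
    name: str
    active_watts: float
    idle_watts: float
    iops: float  # sustained IOPS for the workload being modeled


def window_energy_j(drive: DriveProfile, ios: int, window_s: float) -> float:
    """Energy one drive uses to serve `ios` operations inside a fixed window.

    The drive is assumed to be active only while servicing IO and idle for
    the rest of the window, so a faster drive spends more time at idle power.
    """
    busy_s = ios / drive.iops
    if busy_s > window_s:
        raise ValueError(f"{drive.name} cannot finish {ios} IOs in {window_s} s")
    return busy_s * drive.active_watts + (window_s - busy_s) * drive.idle_watts


def raid_write_ios(logical_writes: int, level: int, drives: int = 2) -> int:
    """Physical drive writes per batch of logical writes for simple RAID levels.

    Assumes each logical write fits within one stripe unit. RAID 0 stripes
    without redundancy (one physical write per logical write); RAID 1 mirrors,
    so each logical write lands on every drive in the mirror.
    """
    if level == 0:
        return logical_writes
    if level == 1:
        return logical_writes * drives
    raise ValueError("only RAID 0 and RAID 1 are modeled in this sketch")


# Illustrative comparison with made-up figures.
hdd = DriveProfile("HDD", active_watts=9.0, idle_watts=5.0, iops=200)
ssd = DriveProfile("SSD", active_watts=6.0, idle_watts=1.5, iops=50_000)
for drive in (hdd, ssd):
    print(drive.name, window_energy_j(drive, ios=10_000, window_s=60.0))
print("RAID 1 physical writes:", raid_write_ios(1_000, level=1))
```

The fixed window is a deliberate choice. Comparing devices over the same wall-clock period captures the "finish fast, then idle" benefit that a per-IO active-power figure alone would miss.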

Software Storage Architectures

- Compression and Deduplication:

Storage systems that use compression and deduplication techniques can affect power consumption. Compressing data before storage reduces the amount of data that must be read and written, and deduplication avoids storing redundant copies of the same data, so both can save power. Both techniques also consume CPU cycles, so the net savings depend on whether the reduced IO outweighs the added processing.

- Caching:

Caching mechanisms keep frequently accessed data in faster storage layers, such as SSDs. This reduces accesses to power-hungry HDDs or higher-latency storage devices, contributing to better power efficiency.

- Data Tiering:

Similar to caching, data tiering moves data between storage tiers based on access patterns. Hot (frequently accessed) data is placed on the more power-efficient storage layers.

- Virtualization:

Virtualized environments can introduce resource contention and inefficiencies that increase power consumption. Proper resource allocation and management are crucial to optimizing power efficiency.

- Load Balancing:

In storage clusters, load balancing distributes data and workloads evenly. Efficient load balancing prevents overutilization of individual components, helping to spread power consumption evenly.

- Thin Provisioning:

Allocating storage on demand rather than pre-allocating it leads to more efficient use of storage resources. This indirectly improves power efficiency, because fewer drives need to be provisioned and powered up front.
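The caching point can be made concrete by counting how many reads a cache absorbs before they reach the slower tier. The following is a minimal sketch using a least-recently-used (LRU) eviction policy, which is only one of many policies real storage caches use. The `LRUReadCache` class, the `estimated_energy_j` helper, the block trace, and the per-IO energy costs are all hypothetical, chosen for illustration rather than measured.

```python
from collections import OrderedDict


class LRUReadCache:
    """Least-recently-used read cache in front of a slower backing tier.

    Counts reads served from the cache tier (hits) and reads that fall
    through to the backing tier, for example HDDs (misses).
    """

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.entries: OrderedDict[int, bool] = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, block: int) -> None:
        if block in self.entries:
            self.entries.move_to_end(block)  # mark as most recently used
            self.hits += 1
            return
        self.misses += 1  # served by the backing tier, then cached
        self.entries[block] = True
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used


def estimated_energy_j(hits: int, misses: int,
                       cache_j_per_io: float, backing_j_per_io: float) -> float:
    """Energy estimate from hypothetical per-IO costs of each tier.

    Assumes a miss costs one backing-tier read and ignores the cost of
    filling the cache.
    """
    return hits * cache_j_per_io + misses * backing_j_per_io


# Made-up block trace and per-IO energy costs.
trace = [1, 2, 1, 3, 1, 2, 4, 1]
cache = LRUReadCache(capacity=3)
for block in trace:
    cache.read(block)
with_cache = estimated_energy_j(cache.hits, cache.misses, 0.0001, 0.01)
without_cache = len(trace) * 0.01
print(cache.hits, cache.misses, with_cache, without_cache)
```

The same counting approach applies to data tiering. The difference is that tiering relocates data according to longer-term access statistics, rather than copying it into a cache on each miss.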

The
